
Closing the AI Loop in Software Development

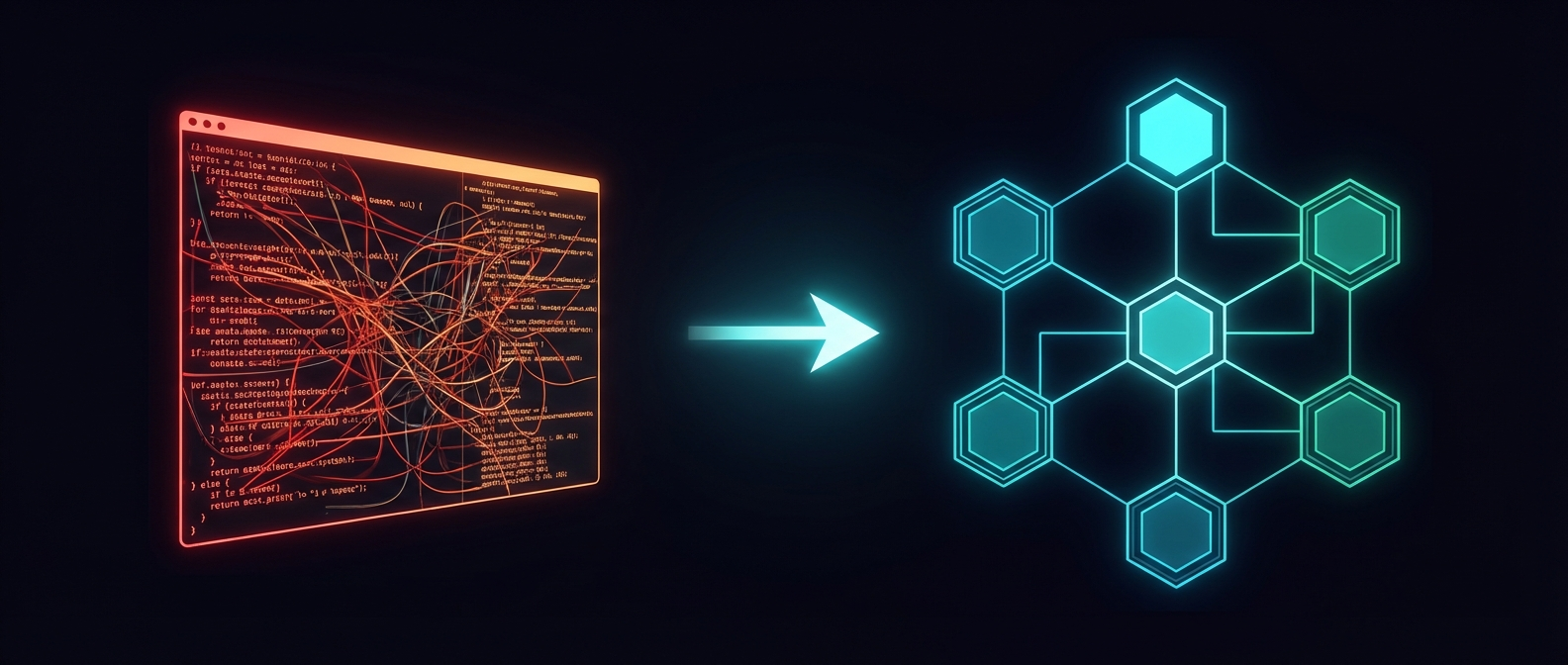

Lately I’ve been thinking hard about something that feels critical when you’re building with AI: 💥 you’re probably doing it wrong 💥

Me too, by the way. No judgment here.

Not because you don’t know how to prompt a model or write decent code, but because you’re not giving the agent the same capabilities a human developer has.

We want AI to understand, diagnose, and fix bugs, yet we keep it blind and deaf to the development environment.

When you close that loop, that’s when the magic happens: the agent stops acting like a clumsy assistant and becomes an autonomous developer that can spot and solve issues.

Here’s what it needs to get there. 👇

1️⃣ Access to the codebase

This is the foundation and the step everyone mentions: let the agent read and understand your code. The cleaner it is—with sound architecture, documentation, and coherent naming—the more effective the agent becomes.

No big mystery here, but it’s the base layer that supports everything else.

2️⃣ Access to persistence (your database)

Your model needs to understand what data lives in your database. A lot of bugs don’t live in the code—they’re hiding in the data it handles.

If you use an ORM like Prisma, tell the model. It can read the schema file and write queries against it.

If not, expose the data through an MCP server or any other controlled interface.

Without access to the data, the agent is programming blindfolded.
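
Here is a minimal sketch of what a "controlled interface" could look like. It is not Prisma or MCP: it assumes a SQLite database and Python's built-in `sqlite3` module, and the `users` table, the `run_readonly_query` name, and the row cap are all made up for illustration. The idea is one tool that lets the agent read data without being able to change it.

```python
import sqlite3

MAX_ROWS = 50  # hypothetical cap so one query cannot flood the agent's context


def run_readonly_query(conn: sqlite3.Connection, sql: str) -> list[dict]:
    """Run a single SELECT statement and return at most MAX_ROWS rows as dicts."""
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    cursor = conn.execute(sql)  # execute() refuses strings with several statements
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchmany(MAX_ROWS)]


# Demo data standing in for a real application database (assumed schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO users (email) VALUES ('ana@example.com'), (NULL)")
conn.commit()

# The kind of question an agent might ask while hunting a data bug.
rows = run_readonly_query(conn, "SELECT id, email FROM users WHERE email IS NULL")
print(rows)  # [{'id': 2, 'email': None}]
```

The prefix check is a coarse guard. In a real setup, a read-only database user is probably the safer way to enforce the same rule.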

3️⃣ Ability to call the API

Your model has to communicate with the backend, especially if what you’re building depends on remote services.

You can unlock this in several ways:

- Through the existing client code.

- With an intermediate CLI or MCP server.

- Or with a well-defined JSON Schema or OpenAPI specification.

Even basic curl commands can work wonders if your API is well structured.

Again, the goal is for the agent to verify the real API responses and understand whether the bug lives there.
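
To make that concrete, here is a small sketch using only Python's standard library. The function names (`fetch_json`, `find_problems`) and the field names in the example are assumptions, not part of any real API. It shows the agent fetching a response and comparing it with what the client code expects.

```python
import json
import urllib.error
import urllib.request


def fetch_json(url: str, timeout: float = 5.0) -> tuple[int, dict]:
    """GET a URL and return (status code, parsed JSON body)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status, json.loads(response.read().decode("utf-8"))
    except urllib.error.HTTPError as error:
        # urlopen raises on 4xx/5xx; report the status with an empty body.
        return error.code, {}


def find_problems(status: int, body: dict, required_fields: list[str]) -> list[str]:
    """List the ways a response differs from what the client code expects."""
    problems = []
    if status != 200:
        problems.append(f"unexpected status {status}")
    for name in required_fields:
        if name not in body:
            problems.append(f"missing field '{name}'")
        elif body[name] is None:
            problems.append(f"field '{name}' is null")
    return problems


# Canned response for illustration; in practice pass in the result of fetch_json(...).
sample_body = {"id": 42, "email": None}
print(find_problems(200, sample_body, ["id", "email", "created_at"]))
# ["field 'email' is null", "missing field 'created_at'"]
```

A report like this tells the agent whether to keep digging in the frontend or move on to the backend.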

4️⃣ Seeing and interacting with the UI

If the AI generates UI code but can’t test it, you end up being its eyes, sending screenshots or logs back and forth.

That’s no longer necessary.

Today there are tools that let the agent interact with the interface on its own:

- Playwright or Chrome DevTools for web.

- Axe for iOS.

- ADB or mobilecli for Android.

With these, the AI can inspect the DOM (or the native view hierarchy on mobile), click and tap around, and validate its own work.
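
For the web case, a rough sketch with Playwright's Python API could look like this. It assumes Playwright and its Chromium browser are installed. The `UiReport` class, the `inspect_page` function, the screenshot file name, and the URL and selector in the usage comment are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class UiReport:
    """What the agent learns from one visit to a page."""
    url: str
    title: str = ""
    console_errors: list = field(default_factory=list)

    def summary(self) -> str:
        if self.console_errors:
            status = f"{len(self.console_errors)} console error(s)"
        else:
            status = "clean"
        return f"{self.url} ({self.title or 'no title'}): {status}"


def inspect_page(url: str, click_selector: Optional[str] = None) -> UiReport:
    """Open the page headlessly, optionally click one element, collect console errors."""
    # Imported here so the rest of the file loads even without Playwright installed.
    from playwright.sync_api import sync_playwright

    report = UiReport(url=url)

    def on_console(msg) -> None:
        # Keep browser console errors so nobody has to paste them by hand.
        if msg.type == "error":
            report.console_errors.append(msg.text)

    with sync_playwright() as p:
        browser = p.chromium.launch()
        page = browser.new_page()
        page.on("console", on_console)
        page.goto(url)
        if click_selector:
            page.locator(click_selector).click()
        report.title = page.title()
        page.screenshot(path="after-click.png")  # evidence the agent can look at
        browser.close()
    return report


# Hypothetical usage against a local dev server:
# print(inspect_page("http://localhost:3000", "text=Save").summary())
```

The agent runs this after each change and reads the summary and screenshot itself, instead of waiting for you to send them.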

When you combine all of this (code, data, API, and UI), you close the loop.

At that moment, your AI stops being a glorified autocomplete and becomes a real digital developer.

We dive deep into all of this (and much more) in the AI Expert program.

The November cohort is full, but we’ll open a new one in January.

👉 Learn more and reserve your spot here

What do you think? Are you already doing this in your projects?